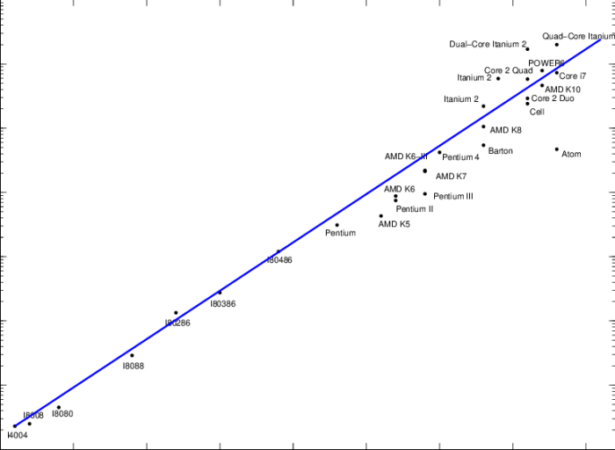

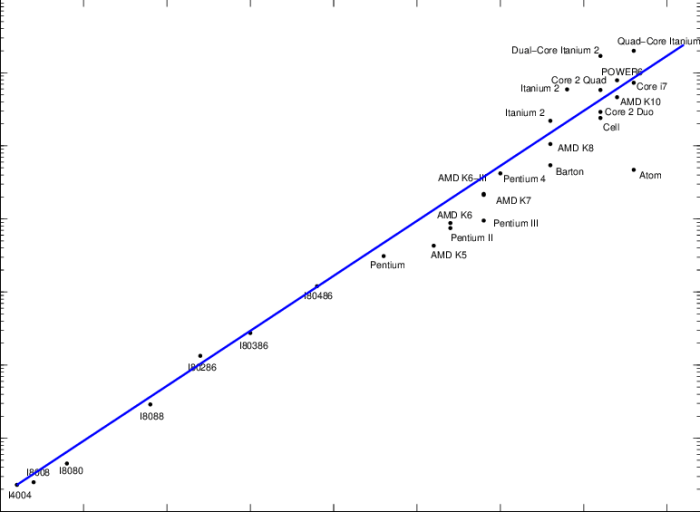

What is Moore’s Law? It’s an observation first made in 1965 by Gordon Moore, who later co-founded Intel, that the number of transistors on an integrated circuit doubles at a regular interval. Moore originally projected a doubling every year and revised the figure in 1975 to approximately every two years. This seemingly simple projection became a planning target for the semiconductor industry and has shaped decades of progress in computing.
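As a rough illustration of what a two-year doubling implies, the sketch below projects transistor counts forward from a single baseline. The function name `projected_transistors` is made up for this example, and the baseline (the Intel 4004 of 1971, with roughly 2,300 transistors) is just one convenient starting point. The output is an idealized curve, not a record of actual chips.

```python
def projected_transistors(base_count, base_year, target_year, doubling_years=2.0):
    """Project a transistor count assuming a fixed doubling period.

    Idealized model: count = base_count * 2 ** (elapsed_years / doubling_years).
    """
    elapsed = target_year - base_year
    return base_count * 2 ** (elapsed / doubling_years)


# Assumed baseline for illustration: Intel 4004 (1971), roughly 2,300 transistors.
BASE_YEAR = 1971
BASE_COUNT = 2_300

for year in (1981, 1991, 2001, 2011, 2021):
    count = projected_transistors(BASE_COUNT, BASE_YEAR, year)
    print(f"{year}: ~{count:,.0f} transistors (idealized projection)")
```

Changing `doubling_years` to 1.0 or 3.0 shows how sensitive the long-run result is to the doubling period, which is why even a modest slowdown matters so much.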

That steady doubling underlies the advances in computing power we’ve witnessed over the past decades. It is why the bulky computers of the past gave way to the powerful smartphones we carry in our pockets today, and why we can access information, communicate, and create in ways that were once unimaginable.

The Future of Computing

For decades, transistor scaling has delivered exponential growth in computing power. However, as transistors shrink toward atomic scales, silicon-based technology is approaching physical limits such as current leakage and heat dissipation. This raises a fundamental question: what will happen to computing beyond Moore’s Law?

The Future of Computing Beyond Moore’s Law

The future of computing beyond Moore’s Law is likely to be driven by a combination of approaches, including:

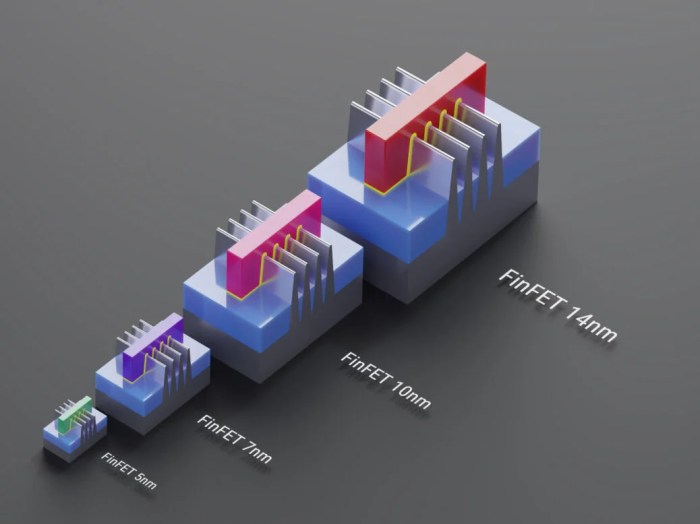

- Continued miniaturization: While traditional silicon-based transistors may be approaching their physical limits, there are still opportunities for miniaturization using alternative materials and architectures. For example, researchers are exploring the use of carbon nanotubes, graphene, and other materials to create smaller and more efficient transistors.

- Three-dimensional (3D) integration: Stacking multiple layers of circuitry on top of each other can increase density and performance without shrinking individual transistors. This approach is already used in products such as 3D NAND flash memory and stacked high-bandwidth memory.

- New computing paradigms: Beyond traditional von Neumann architecture, new computing paradigms are emerging, such as neuromorphic computing, which mimics the structure and function of the human brain. These paradigms offer the potential for more efficient and powerful computing, particularly for tasks that are difficult for conventional computers, such as image recognition and natural language processing.

- Quantum computing: Quantum computing uses the principles of quantum mechanics to tackle certain calculations that are impractical for classical computers. This technology has the potential to reshape fields such as drug discovery, materials science, and artificial intelligence.

Quantum Computing

Quantum computing uses the principles of quantum mechanics to perform certain calculations that are impractical for classical computers. It relies on qubits, which can exist in a superposition of 0 and 1, unlike classical bits, which are always exactly 0 or 1. Combined with entanglement and interference, this lets quantum algorithms solve specific types of problems far faster than the best known classical methods. The speedup is exponential in some cases, such as factoring large integers, and more modest in others.
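To make the idea of a qubit holding "multiple states" a little more concrete, here is a minimal sketch that simulates a single qubit classically as a pair of amplitudes. The helper names (`hadamard`, `probabilities`, `amplitudes_needed`) are invented for this example and are not taken from any quantum library. It also shows why classical simulation gets hard: describing n qubits in general takes 2^n amplitudes.

```python
import math


def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state given as (amp0, amp1)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Return the probabilities of measuring 0 and 1 for a single-qubit state."""
    return tuple(abs(amp) ** 2 for amp in state)


def amplitudes_needed(n_qubits):
    """Number of amplitudes a general n-qubit state vector contains."""
    return 2 ** n_qubits


zero = (1.0, 0.0)        # the qubit starts in the definite state 0
plus = hadamard(zero)    # now an equal superposition of 0 and 1
print("Measurement probabilities:", probabilities(plus))

for n in (1, 10, 50):
    print(f"{n} qubits -> {amplitudes_needed(n):,} amplitudes to track classically")
```

A measurement still returns a single 0 or 1, so the superposition is not free parallelism. Useful quantum algorithms arrange for interference to make the right answers more likely.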

Potential Benefits of Quantum Computing

- Drug discovery: Quantum computers can be used to simulate complex molecular interactions, which can accelerate the process of drug discovery and development.

- Materials science: Quantum simulations can help researchers design new materials with desired properties, such as high conductivity or strength.

- Artificial intelligence: Quantum computing may eventually speed up parts of some machine learning algorithms, enabling the development of more powerful and efficient AI systems.

- Financial modeling: Quantum computers could be used for optimization and simulation tasks in financial models, such as portfolio optimization and risk analysis.

Potential Drawbacks of Quantum Computing

- Technical challenges: Building and operating quantum computers is extremely challenging, requiring specialized equipment and expertise.

- Limited applicability: While quantum computers offer significant advantages for specific types of problems, they are not a replacement for classical computers for all tasks.

- High cost: Quantum computing is currently very expensive, limiting its accessibility to large companies and research institutions.
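The "limited applicability" point can be illustrated with unstructured search, where the quantum advantage is real but only quadratic. The sketch below compares the expected number of lookups for a classical search that checks items in random order against the approximate number of iterations Grover's algorithm needs for one marked item, about (π/4)·√N. The function names are hypothetical, and the comparison counts queries only, ignoring hardware speed and error correction overhead.

```python
import math


def classical_expected_queries(n_items):
    """Expected lookups to find one marked item, checking items in random order without repeats."""
    return (n_items + 1) / 2


def grover_iterations(n_items):
    """Approximate optimal number of Grover iterations for one marked item among n_items."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


for n in (1_000, 1_000_000, 1_000_000_000):
    classical = classical_expected_queries(n)
    quantum = grover_iterations(n)
    print(f"N = {n:>13,}: classical ~{classical:,.0f} lookups, Grover ~{quantum:,} iterations")
```

For many everyday workloads there is no known quantum speedup at all, which is why quantum machines are expected to complement classical computers rather than replace them.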

Comparison of Future Computing Approaches

The following table compares the potential benefits and drawbacks of different future computing approaches:

| Approach | Potential Benefits | Potential Drawbacks |
|---|---|---|
| Continued miniaturization | Increased performance and efficiency, reduced cost | Physical limitations, potential for increased heat generation |
| 3D integration | Increased density and performance, reduced power consumption | Complex manufacturing processes, potential for reliability issues |
| Neuromorphic computing | Improved efficiency for tasks like image recognition and natural language processing | Still in early stages of development, limited scalability |
| Quantum computing | Exponential speedup for specific types of problems, potential to revolutionize various fields | Technical challenges, limited applicability, high cost |

Ending Remarks

While Moore’s Law has slowed in recent years, its impact on the world is undeniable. Its influence continues to be felt across industries, and its legacy will likely shape the future of technology for years to come. As we look ahead, we can expect continued innovation in computing, driven by the same pursuit of ever-increasing processing power that Moore’s Law came to represent.

Question & Answer Hub: What Is Moore’s Law

What is the significance of Moore’s Law?

Moore’s Law has driven the exponential growth of computing power, leading to smaller, faster, and more affordable devices. This has revolutionized many industries and aspects of our lives.

Is Moore’s Law still relevant today?

While the pace of transistor density growth has slowed, Moore’s Law remains a guiding target for the industry. The slowdown has also spurred research and development in alternatives such as 3D integration, new materials, and quantum computing.

What are some of the challenges facing Moore’s Law?

Challenges include the physical limitations of silicon-based transistors, the increasing cost of manufacturing, and the growing energy consumption of computing devices.

What is the future of computing beyond Moore’s Law?

The future holds exciting possibilities, with advancements in quantum computing, neuromorphic computing, and other emerging technologies promising to overcome the limitations of traditional computing.